Google Cloud - Vertex AI Platform

Google Vertex AI is the unified artificial intelligence (AI) and machine learning (ML) platform from Google Cloud, designed to simplify the full lifecycle of data science models and projects. Its core proposition is to bring data preparation, model training, evaluation, deployment, and monitoring into a single environment, reducing the complexity of working with multiple isolated tools. Vertex AI combines Google’s scalable infrastructure with managed services, allowing technical teams to focus more on experimentation and less on administration.

The platform supports both custom models and pre-trained models, including state-of-the-art generative models. Thanks to its native integration with other Google Cloud services such as BigQuery, Dataproc, and Looker, it simplifies connections to data sources, large-scale analysis, and the agile creation of AI-based applications. Vertex AI also includes MLOps tools to manage traceability, versioning, and pipeline automation, reinforcing governance and compliance in enterprise environments.

A notable point is its focus on accessibility: Vertex AI offers an intuitive graphical interface for business users or analysts, while also exposing APIs and SDKs for more technical profiles. This enables different roles within an organization to collaborate on the same platform. The flexibility to work in a “low-code” mode or through advanced programming makes Vertex AI a versatile choice for companies seeking to accelerate AI adoption without sacrificing control or customization.

Main features of Google Vertex AI

AutoML

Vertex AI offers AutoML, a feature that allows users without advanced programming or machine learning knowledge to train custom models using their own data. With this tool, you can create image classification, natural language processing (NLP), or tabular prediction models, while the service selects architectures and tunes hyperparameters automatically behind the scenes. This speeds up AI adoption in companies with small teams or more business-oriented profiles.

Custom model training

In addition to AutoML, Vertex AI supports the training of advanced models developed with frameworks such as TensorFlow, PyTorch, or scikit-learn. The service manages the underlying infrastructure, enabling training at scale using GPUs and TPUs without requiring users to manage servers. This is essential for deep learning projects that demand high computing power.

MLOps and pipelines

With Vertex AI, teams can implement MLOps natively through its pipeline system. Pipelines automate tasks such as data preprocessing, training, validation, and model deployment. The platform also includes dataset and model versioning, experiment tracking, and data drift monitoring, which together provide traceability and governance for production projects.
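To make the idea of an automated, traceable pipeline concrete, here is a minimal sketch in plain Python. It is not the Vertex AI Pipelines API: the `RunRecord` structure, the step functions, the toy data, and the `min_accuracy` gate are all hypothetical names chosen for illustration. It only shows the pattern of chaining preprocessing, training, and validation while recording metadata for each step.

```python
from dataclasses import dataclass, field


@dataclass
class RunRecord:
    """Metadata captured for one pipeline run (hypothetical structure)."""
    steps: list = field(default_factory=list)


def preprocess(rows):
    # Drop rows that contain a missing value.
    return [r for r in rows if None not in r]


def train(rows):
    # Toy "model": a threshold placed at the mean of the feature column.
    mean = sum(x for x, _ in rows) / len(rows)
    return {"threshold": mean}


def validate(model, rows):
    # Fraction of rows where (feature > threshold) agrees with the label.
    hits = sum((x > model["threshold"]) == bool(y) for x, y in rows)
    return hits / len(rows)


def run_pipeline(raw_rows, min_accuracy=0.8):
    """Run the steps in order and log what each one consumed and produced."""
    record = RunRecord()
    clean = preprocess(raw_rows)
    record.steps.append(
        {"step": "preprocess", "rows_in": len(raw_rows), "rows_out": len(clean)}
    )
    model = train(clean)
    record.steps.append({"step": "train", "params": model})
    accuracy = validate(model, clean)
    record.steps.append({"step": "validate", "accuracy": accuracy})
    # Deployment is only approved when validation clears the assumed threshold.
    record.steps.append({"step": "deploy_gate", "approved": accuracy >= min_accuracy})
    return record


data = [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1), (9.0, 1)]
record = run_pipeline(data)
for entry in record.steps:
    print(entry)
```

The recorded steps play the role that run metadata and artifact lineage play in a managed pipeline service: each run can be inspected afterwards to see what went in, what came out, and why a deployment was or was not approved.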

Deployment and predictions

Once trained, a model can be deployed as a scalable endpoint within Vertex AI. This enables real-time or batch predictions, with automatic load balancing and performance monitoring. This capability shortens delivery times for enterprise applications requiring AI in production, such as recommendation systems, anomaly detection, or natural language processing.
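As an illustration of what calling a deployed endpoint can look like, the sketch below only assembles the URL and JSON body for an online prediction request; it does not send anything. The project, region, endpoint ID, and instance fields are placeholders, and the instance schema in particular depends entirely on the deployed model, so treat this as an assumption-laden example and check it against the current REST reference.

```python
import json


def build_predict_request(project, location, endpoint_id, instances, parameters=None):
    """Return (url, body) for an online prediction call; nothing is sent."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{location}/endpoints/{endpoint_id}:predict"
    )
    body = {"instances": instances}
    if parameters is not None:
        body["parameters"] = parameters
    return url, json.dumps(body)


predict_url, predict_body = build_predict_request(
    project="my-project",      # placeholder project ID
    location="us-central1",    # placeholder region
    endpoint_id="1234567890",  # placeholder endpoint ID
    instances=[{"age": 42, "plan": "premium"}],  # placeholder; schema is model-specific
)
print(predict_url)
print(predict_body)
```

A real call would POST this body with an OAuth 2.0 bearer token in the `Authorization` header. In practice most teams would use the Python SDK rather than hand-built requests; the raw form is shown here only because it makes the shape of the exchange visible.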

Generative and pre-trained models

Vertex AI integrates access to generative and pre-trained models from Google, such as vision, language, and conversational models. This allows developers to directly use Generative AI APIs to build chatbots, automatic summaries, translation, or sentiment analysis applications without needing to train from scratch. This feature is key to accelerating the development of next-generation applications.
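For a sense of how little code a generative use case such as summarization needs, the following sketch builds (but does not send) a text generation request. The project, region, and `MODEL_ID` values are placeholders, the prompt and generation settings are arbitrary, and the request shape should be verified against the current API reference before use.

```python
import json


def build_generate_request(project, location, model_id, prompt,
                           temperature=0.2, max_output_tokens=256):
    """Return (url, body) for a text generation call; nothing is sent."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{location}/"
        f"publishers/google/models/{model_id}:generateContent"
    )
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "temperature": temperature,
            "maxOutputTokens": max_output_tokens,
        },
    }
    return url, json.dumps(body)


gen_url, gen_body = build_generate_request(
    project="my-project",    # placeholder project ID
    location="us-central1",  # placeholder region
    model_id="MODEL_ID",     # placeholder; substitute an available model
    prompt="Summarize the following support ticket in two sentences: ...",
)
print(gen_url)
print(gen_body)
```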

Integration with BigQuery and data sources

A key differentiator of Vertex AI is its native integration with BigQuery, which facilitates large-scale data extraction and transformation. It also connects with Dataflow, Dataproc, and other Google Cloud data sources, eliminating the typical friction in preparing datasets for training. This interoperability reduces preparation time and enables working with millions of records smoothly.

Graphical interface, APIs, and SDKs

Vertex AI combines an intuitive graphical interface for non-technical users with REST APIs and Python SDKs for developers. This creates a hybrid workspace where data scientists, analysts, and developers can collaborate on the same project. The flexibility to work in low-code mode or with advanced coding ensures that the platform adapts to different levels of AI maturity.

Monitoring and security

The platform includes continuous monitoring mechanisms for models in production, with alerts on performance deviations and data drift. In addition, it leverages Google Cloud’s security infrastructure, incorporating access controls, auditing, and compliance features. This is essential in regulated sectors such as finance or healthcare, where trust and control over models are mandatory.

Technical review of Vertex AI

Vertex AI is the unified Google Cloud platform designed to comprehensively cover the lifecycle of AI, Data Science, and Machine Learning projects. Within a single interface, it combines training tools (AutoML and custom training), deployment, and monitoring, along with access to Generative AI services and Google’s model catalog.

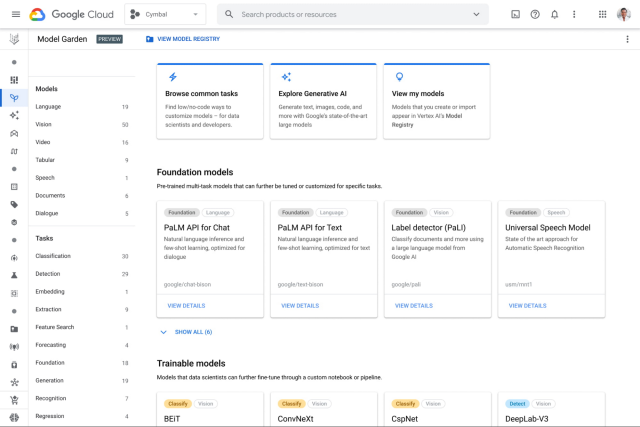

Architecturally, the proposal revolves around modules that facilitate model industrialization: Vertex AI Studio as a workspace, Model Registry for version control, Feature Store for centralizing and serving features both online and in batch, and Pipelines for orchestrating reproducible and auditable workflows. This combination allows artifact lineage tracking, experiment reproducibility, and consistency between training and serving.

In practice, Vertex AI offers two working paths: AutoML for teams seeking fast results without coding details, and custom training, which supports containers and SDKs for those needing fine control over architectures and optimizations. The platform includes SDKs (for example, for Python) and support for popular frameworks, making it easier to port existing workloads and scale training with managed resources.
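The custom training path can be pictured as a job description that names a container image and the hardware to run it on. The sketch below assembles such a description as a plain dictionary, loosely following the shape of a custom job request. The image URI, machine type, accelerator type, and arguments are placeholder values, and the helper `build_custom_job` is a hypothetical name, so this should be read as an illustration rather than a verified request.

```python
import json


def build_custom_job(display_name, image_uri, args, machine_type,
                     accelerator_type=None, accelerator_count=0, replica_count=1):
    """Assemble a custom-training job description as a plain dict."""
    machine_spec = {"machineType": machine_type}
    # Accelerators are optional; include them only when requested.
    if accelerator_type and accelerator_count > 0:
        machine_spec["acceleratorType"] = accelerator_type
        machine_spec["acceleratorCount"] = accelerator_count
    worker_pool = {
        "machineSpec": machine_spec,
        "replicaCount": replica_count,
        "containerSpec": {"imageUri": image_uri, "args": list(args)},
    }
    return {"displayName": display_name, "jobSpec": {"workerPoolSpecs": [worker_pool]}}


gpu_job = build_custom_job(
    display_name="churn-model-training",  # placeholder name
    image_uri="us-docker.pkg.dev/my-project/my-repo/trainer:latest",  # placeholder image
    args=["--epochs", "10"],              # placeholder training arguments
    machine_type="n1-standard-8",
    accelerator_type="NVIDIA_TESLA_T4",
    accelerator_count=1,
)
print(json.dumps(gpu_job, indent=2))
```

Keeping the training logic inside a container and the hardware choice inside the job description is what allows the same code to move from a CPU test run to a multi-GPU run by changing only the specification.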

Its orchestration and governance capabilities are among its strengths: Vertex AI Pipelines automates the preparation, training, and validation phases, stores metadata, and allows inspection of runs and artifacts, which helps teams implement reproducible, auditable MLOps practices. This is particularly valuable in regulated environments where traceability must be demonstrated.

For production operations, the platform incorporates monitoring (data drift, metric degradation), serving both in real time and batch, and explainability tools that make it easier to analyze model decisions. This layer reduces the risk of unnoticed “drift” and speeds up the detection of regressions after deployment.
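Data drift detection generally comes down to comparing the distribution of a feature at training time with its distribution in production. The sketch below uses the Jensen-Shannon divergence over histograms, which is one common choice for this comparison; it is a generic illustration and not a description of how the platform computes its own drift scores. The sample values, bin edges, and the 0.3 alert threshold are assumptions.

```python
import math


def histogram(values, edges):
    """Return normalized bin frequencies; values outside the edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            # Bins are half-open, except the last one, which includes its upper edge.
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the given bin edges")
    return [c / total for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence with base-2 logs, ranging from 0 to 1."""
    def kl(a, b):
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


edges = [0, 2, 4, 6, 8]
training_values = [1, 2, 2, 3, 3, 3, 4, 4, 5]
serving_values = [3, 4, 4, 5, 5, 5, 6, 6, 7]

baseline = histogram(training_values, edges)
current = histogram(serving_values, edges)
drift = js_divergence(baseline, current)

ALERT_THRESHOLD = 0.3  # assumed value; tune per feature
print(f"drift score: {drift:.3f}, alert: {drift > ALERT_THRESHOLD}")
```

A score of 0 means the two histograms are identical and a score of 1 means they do not overlap at all, so a threshold somewhere in between decides when an alert fires.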

For generative AI and large language models (LLMs), Vertex AI exposes the Model Garden and supports multimodal models such as Gemini, along with Google’s latest specialized models for vision, text, and code. Recently, video generation capabilities (Veo 3 / Veo 3 Fast models) and third-party integrations have been added to expand the model offering, making the platform a competitive choice for large-scale content generation projects.

At the integration level, Vertex AI connects natively with BigQuery, Cloud Storage, IAM, and VPC networks, making it easy to integrate pipelines that process large-scale data and maintain centralized access controls. It also supports external libraries and ecosystems (for example, integration with Ray for training scalability), simplifying the migration of workloads from distributed architectures.

However, there are some trade-offs. Heavy adoption can lead to vendor lock-in through dependency on APIs, formats, and managed services; the cost structure can be complex for projects with intensive training and frequent deployments; and mastering pipelines, security, and cost optimization involves a learning curve that requires investment in talent. For organizations with strict on-premises or ultra-low latency requirements, the platform offers less flexibility than hybrid or self-hosted solutions.

In summary, Vertex AI provides a mature stack to accelerate the transition from prototypes to production through its GCP integration, MLOps capabilities, and access to advanced generative models. It’s recommended for teams prioritizing scaling projects with operational control and leveraging managed models; less suitable if avoiding vendor dependency or maintaining full infrastructure control is the priority. For technical teams that value traceability, automation, and access to state-of-the-art models, Vertex AI is a robust platform for deploying enterprise AI.

Summary table of strengths and weaknesses of Google Vertex AI

| Aspect | Strengths | Weaknesses |
|---|---|---|
| Integration | Native connection with BigQuery, Dataproc, Dataflow, Looker | Strong dependency on the Google Cloud ecosystem; less flexible in multicloud/hybrid scenarios |
| Platform | Unified environment: data, training, deployment, and monitoring in one place | Initial complexity: steep learning curve for teams new to Google Cloud |
| Performance | Scalability with TPUs and GPUs; optimized Google infrastructure | High costs for intensive training or large data volumes |
| MLOps | Pipelines, versioning, experiment management, drift monitoring | Requires technical expertise to fully leverage advanced features |
| Models | Support for AutoML, generative, and pre-trained models | Smaller catalog compared to open repositories like Hugging Face |
| Usability | Combines low-code/no-code interface with APIs and SDKs for developers | Less mature external community compared to open-source ecosystems |

Licensing and installation

Regarding licensing, Vertex AI uses a pay-as-you-go model integrated into Google Cloud subscriptions. As for company size, it is ideal for medium and large enterprises requiring scalability and advanced resources, although it also provides accessible options for SMBs.

In terms of installation type, it is fully cloud-managed, with no on-premises version, though its SDKs can be used from hybrid environments.

Frequently Asked Questions about Vertex AI

What is Vertex AI?

Summary: Google Cloud platform for designing, training, deploying, and monitoring AI models at scale.

Vertex AI centralizes MLOps, AutoML, and custom training tools, connecting services like BigQuery, Cloud Storage, and IAM to simplify model production in enterprise environments.

What is Vertex AI used for?

Summary: To transform data into deployable models that generate predictions and AI applications.

It is used throughout the entire cycle: data ingestion and labeling, experimentation, feature management (Feature Store), pipeline orchestration, real-time serving, and drift and performance monitoring.

Does Vertex AI include AutoML?

Summary: Yes, it provides AutoML workflows for creating models without writing advanced code.

AutoML in Vertex AI helps train models for classification, regression, and vision, with managed pipelines and automatic hyperparameter tuning, which suits teams with limited ML specialization.

What frameworks and languages does it support?

Summary: Support for frameworks such as TensorFlow, PyTorch, and scikit-learn, plus a Python SDK and custom containers.

Users can run their own code in Docker containers, use Vertex Python SDKs, or integrate external frameworks (e.g., Ray) for distributed scaling and custom training jobs.

How does Vertex AI compare to AWS SageMaker or Azure ML?

Summary: A direct competitor: similar in functionality, with advantages in GCP integration and Google model access.

The choice depends on the organization’s dominant cloud provider, integration requirements (BigQuery vs S3/Azure Data Lake), tolerance for vendor lock-in, and the pricing of the managed services involved.

How much does it cost to use Vertex AI?

Summary: Costs vary: charges apply for training, serving, storage, and managed services.

Billing combines compute rates (CPUs/GPUs/TPUs), storage, traffic, and managed features (Feature Store, Pipelines). It’s advisable to estimate training and serving workloads and monitor budgets and alerts.
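A rough estimate can be put together with simple arithmetic before any workload runs. In the sketch below every rate is a hypothetical number chosen for illustration, not actual pricing, and the `Rates` and `monthly_estimate` names are made up for this example. It covers only training, serving, and storage; traffic and managed-feature charges would need to be added in the same way.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Hypothetical rates in USD; replace with figures from the current price list."""
    training_node_hour: float
    serving_node_hour: float
    storage_gb_month: float


def monthly_estimate(rates, training_hours, serving_nodes, storage_gb,
                     hours_in_month=730):
    """Break a monthly bill into training, serving, and storage components."""
    training = rates.training_node_hour * training_hours
    # Serving nodes are assumed to run around the clock.
    serving = rates.serving_node_hour * serving_nodes * hours_in_month
    storage = rates.storage_gb_month * storage_gb
    return {
        "training": training,
        "serving": serving,
        "storage": storage,
        "total": training + serving + storage,
    }


rates = Rates(training_node_hour=2.50, serving_node_hour=0.20, storage_gb_month=0.02)
estimate = monthly_estimate(rates, training_hours=40, serving_nodes=2, storage_gb=500)

MONTHLY_BUDGET = 500.0  # assumed budget used for a simple alert check
for item, cost in estimate.items():
    print(f"{item}: {cost:.2f} USD")
print("over budget:", estimate["total"] > MONTHLY_BUDGET)
```

Even with made-up rates, the breakdown shows a pattern worth checking in any real estimate: an always-on serving endpoint can outweigh occasional training runs, because it is billed for every hour of the month.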

Does Vertex AI cause vendor lock-in?

Summary: There is some risk of vendor lock-in due to dependencies on GCP APIs and services.

Using open formats and custom containers, and keeping data portable (e.g., exporting from BigQuery rather than relying on it exclusively), reduces coupling, but proprietary features (Model Garden, management APIs) increase Google Cloud dependency.