Databricks Data Intelligence Platform

Databricks is a unified data platform designed to simplify and accelerate AI, Data Science, and Machine Learning projects in enterprise environments. Its main goal is to provide a collaborative workspace where data scientists, data engineers, and analysts can work in an integrated way, eliminating silos and reducing operational complexity. The foundation of the solution is Apache Spark, which allows it to handle massive volumes of data with scalable cloud performance.

One of its key features is the Lakehouse, an approach that combines the best of Data Lakes and Data Warehouses, enabling both flexible storage of unstructured data and efficient management of structured data for advanced analytics. This allows organizations to centralize their data in a single environment and run ETL, exploration, and modeling processes more efficiently. In addition, Databricks natively integrates collaborative notebooks and ML libraries, and connects with development environments and tools such as MLflow (originally created by Databricks), TensorFlow, and scikit-learn.

Another differentiating factor is its ability to manage the Machine Learning model lifecycle. The platform facilitates not only model training and validation but also experiment tracking, version management, and production deployment. This makes Databricks an end-to-end solution for the data science workflow, reducing friction between research and operations. Its cloud scalability approach (with support for AWS, Azure, and Google Cloud) positions it as an attractive option for companies requiring flexibility and power in advanced analytics projects.

Databricks Features

Lakehouse Architecture

The core functionality of Databricks is its Lakehouse architecture, which unifies the advantages of Data Lakes and Data Warehouses in a single environment. This allows both structured and unstructured data to be stored and processed in one place, removing the need to maintain duplicate systems. Thanks to this convergence, organizations can centralize data storage, improve governance, and enable advanced analytics without constantly moving data across different platforms.

Collaborative Notebooks

Databricks offers cloud-based collaborative notebooks where teams of data scientists, engineers, and analysts can work in real time on the same project. These notebooks support multiple programming languages such as Python, R, SQL, and Scala, encouraging collaboration among diverse technical profiles. They also include integrated visualization tools, making it easier to explore data and quickly prototype models.

Machine Learning and MLOps

The platform integrates a complete environment for Machine Learning model development and its operationalization through MLOps. This includes distributed model training, hyperparameter optimization, and the use of external libraries such as TensorFlow, PyTorch, or scikit-learn. Through MLflow, Databricks enables detailed tracking of experiments, model versioning, and production deployment, shortening lifecycle times and improving reproducibility.
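To make the tracking-and-versioning idea concrete, here is a minimal, stdlib-only sketch of what an experiment tracker records: the parameters and metrics of every run, the selection of the best run, and a registry that assigns increasing version numbers. It does not use the MLflow API; `ExperimentTracker`, `ModelRegistry`, and the sample values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training run: the hyperparameters used and the metrics observed."""
    run_id: int
    params: dict
    metrics: dict


@dataclass
class ExperimentTracker:
    """Hypothetical tracker that keeps every run so results stay reproducible."""
    runs: list = field(default_factory=list)

    def log_run(self, params: dict, metrics: dict) -> Run:
        run = Run(run_id=len(self.runs) + 1, params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        pick = max if higher_is_better else min
        return pick(self.runs, key=lambda r: r.metrics[metric])


@dataclass
class ModelRegistry:
    """Hypothetical registry: each registration under a name gets the next version."""
    versions: dict = field(default_factory=dict)

    def register(self, name: str, run: Run) -> int:
        history = self.versions.setdefault(name, [])
        history.append(run.run_id)
        return len(history)  # version numbers start at 1


# Log a small hyperparameter sweep (hypothetical numbers), then register the winner.
tracker = ExperimentTracker()
for lr, acc in [(0.1, 0.81), (0.01, 0.87), (0.001, 0.84)]:
    tracker.log_run({"learning_rate": lr}, {"accuracy": acc})

best = tracker.best_run("accuracy")
registry = ModelRegistry()
version = registry.register("churn_model", best)
print(best.params, version)
```

In MLflow itself, the corresponding steps are performed with calls such as `mlflow.log_param`, `mlflow.log_metric`, and `mlflow.register_model`, with runs persisted to a tracking server rather than kept in memory.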

Data Processing at Scale

Built on Apache Spark, Databricks distributes processing across the nodes of a cluster, allowing it to handle large data volumes with high performance. This capability makes it well suited to ETL (Extract, Transform, Load) workloads, data streaming, and batch processing. Given sufficient compute resources, the engine can run complex queries over petabyte-scale datasets, letting companies exploit massive datasets without relying on rigid or limited infrastructure.
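The divide-and-merge pattern behind Spark-style ETL can be sketched in plain Python: split the input into partitions, transform and pre-aggregate each partition independently, then merge the partial results. This is a toy model, not Spark code; the sample records and the function names `partition`, `transform`, and `run_etl` are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Extract: raw CSV-like records (hypothetical sample data).
RAW = [
    "2024-01-01,store_a,120.0",
    "2024-01-01,store_b,80.5",
    "2024-01-02,store_a,bad_value",  # malformed row, dropped during Transform
    "2024-01-02,store_c,42.0",
    "2024-01-03,store_b,19.5",
    "2024-01-03,store_a,60.0",
]


def partition(records, n):
    """Split records into n roughly equal partitions (round-robin)."""
    return [records[i::n] for i in range(n)]


def transform(part):
    """Parse rows, drop malformed ones, and pre-aggregate revenue per store."""
    totals = Counter()
    for line in part:
        _, store, amount = line.split(",")
        try:
            totals[store] += float(amount)
        except ValueError:
            continue  # skip rows whose amount is not numeric
    return totals


def run_etl(records, num_partitions=3):
    # Each partition is transformed independently, as executors would do.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(transform, partition(records, num_partitions)))
    # Merge step: combine the per-partition aggregates into one result.
    result = Counter()
    for p in partials:
        result.update(p)
    # Load: a real job would write to a table; here we just return the dict.
    return dict(result)


print(run_etl(RAW))
```

Threads are used only to show the shape of the computation; in Spark the partitions are processed by separate executor processes, typically on different machines, which is where the real parallel speed-up comes from.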

Cloud Ecosystem Integration

Databricks is designed to run on major cloud platforms: AWS, Microsoft Azure, and Google Cloud Platform. This provides companies with flexibility in choosing a provider and allows them to leverage complementary services such as cloud storage, data orchestrators, or security and authentication services. Furthermore, its integration with external BI tools (like Power BI or Tableau) bridges the gap between advanced analytics and business decision-making.

Data Security and Governance

Another key aspect is data security and governance, with features such as row- and column-level access control, usage audits, and data encryption in transit and at rest. Through its Unity Catalog module, Databricks enables centralized management of data cataloging, traceability, and regulatory compliance (e.g., GDPR or HIPAA). This helps ensure that data usage meets enterprise and regulatory standards.
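Row- and column-level access control can be pictured as two rules applied at query time: a row filter decides which records a user may see, and a column mask decides how a sensitive column is shown. The sketch below models that logic in plain Python; it is not the Unity Catalog API, and the `User` type, group names (`admins`, `pii_readers`), and sample rows are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset
    region: str


# Hypothetical customer table.
ROWS = [
    {"customer": "Ana", "region": "EU", "email": "ana@example.com", "spend": 300},
    {"customer": "Bob", "region": "US", "email": "bob@example.com", "spend": 150},
    {"customer": "Chen", "region": "EU", "email": "chen@example.com", "spend": 90},
]


def row_filter(user, row):
    """Row-level rule: admins see all rows, everyone else only their own region."""
    return "admins" in user.groups or row["region"] == user.region


def mask_email(user, value):
    """Column-level rule: only members of 'pii_readers' see raw e-mail addresses."""
    return value if "pii_readers" in user.groups else "***"


def secure_select(user, rows):
    """Apply the row filter first, then mask the sensitive column in what remains."""
    visible = [r for r in rows if row_filter(user, r)]
    return [{**r, "email": mask_email(user, r["email"])} for r in visible]


analyst = User("eve", frozenset({"analysts"}), "EU")
for row in secure_select(analyst, ROWS):
    print(row)
```

Unity Catalog expresses the same idea declaratively, as SQL functions attached to a table as row filters and column masks, so the rules are enforced for every query rather than in application code.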

Automation and Optimization

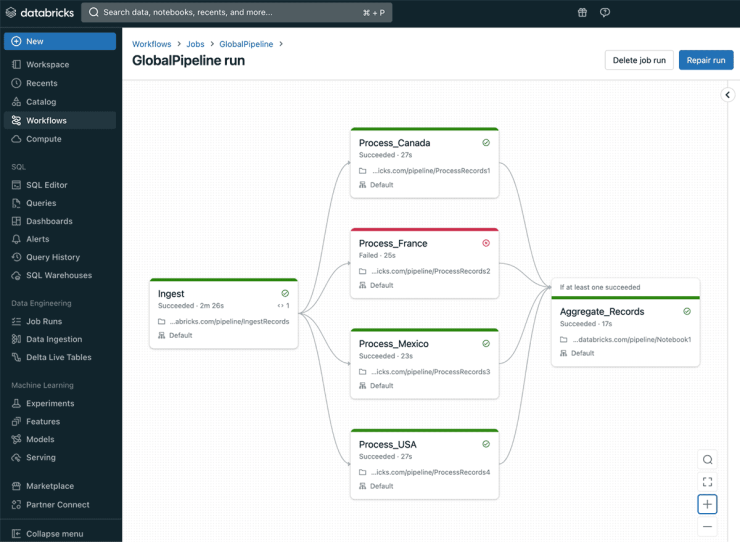

The platform includes automation tools for recurring tasks such as scheduling data pipelines or automatic SQL query optimization. It also features autoscaling capabilities that dynamically adjust computing resources based on workload. These features can reduce operational costs and increase efficiency, supporting large-scale AI and analytics projects without wasting resources.
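When autoscaling is enabled, a Databricks cluster is configured with a minimum and a maximum number of workers, and the platform adjusts the count between those bounds. The actual decision logic is internal to Databricks; the sketch below is a deliberately simplified, hypothetical policy (workers proportional to queued tasks, clamped to the configured range) that only illustrates the min/max bounding.

```python
import math


def target_workers(pending_tasks, tasks_per_worker, min_workers, max_workers):
    """Hypothetical policy: enough workers for the queued tasks, clamped to [min, max]."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    needed = math.ceil(pending_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))


def simulate(load, tasks_per_worker=4, min_workers=2, max_workers=8):
    """Return the worker count chosen at each step for a sequence of queue lengths."""
    return [target_workers(p, tasks_per_worker, min_workers, max_workers) for p in load]


print(simulate([0, 10, 40, 17, 3]))  # [2, 3, 8, 5, 2]
```

The clamp is what keeps costs predictable: an idle cluster never drops below the minimum (so jobs start quickly), and a load spike never pushes it past the maximum (so spending is capped).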

Technical Review of Databricks

Databricks is a data analytics and artificial intelligence platform focused on unifying Data Science, Machine Learning, and data management processes in a single environment. It targets organizations managing large data volumes that need to integrate distributed processing, real-time collaboration, and production model deployment with a high level of scalability.

The Lakehouse architecture combines the best of Data Lakes and Data Warehouses, offering a unified storage model that supports both structured and unstructured data. This approach reduces duplication, avoids system fragmentation, and enables faster data access. Additionally, Delta Lake, the open-source storage layer underlying the Lakehouse, provides ACID transactions, table versioning (including time travel to earlier versions), and reliability when handling critical data.
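Delta Lake's transactional guarantees rest on an ordered transaction log: each commit records which data files were added or removed, a table version is obtained by replaying commits up to that point, and writers use optimistic concurrency (a commit is rejected if the table changed since it was read). The toy model below illustrates those three ideas in memory; it is not the Delta Lake implementation, which stores JSON commit files under `_delta_log` next to Parquet data files, and `ToyTransactionLog` and the file names are hypothetical.

```python
class ConcurrentCommitError(Exception):
    """Raised when a writer tries to commit on top of a stale table version."""


class ToyTransactionLog:
    """Hypothetical, in-memory imitation of a Delta-style transaction log.

    Each commit is a list of ("add", file) / ("remove", file) actions; the
    table state at version v is obtained by replaying commits 0..v in order.
    """

    def __init__(self):
        self.commits = []  # commits[v] holds the actions of version v

    @property
    def latest_version(self):
        return len(self.commits) - 1  # -1 means no commits yet

    def commit(self, actions, read_version):
        # Optimistic concurrency: the writer must have read the latest version.
        if read_version != self.latest_version:
            raise ConcurrentCommitError(
                f"read version {read_version}, latest is {self.latest_version}")
        self.commits.append(list(actions))  # all actions land as one atomic commit
        return self.latest_version

    def snapshot(self, version=None):
        """Return the set of live data files at `version` (default: latest)."""
        version = self.latest_version if version is None else version
        files = set()
        for actions in self.commits[: version + 1]:
            for op, path in actions:
                if op == "add":
                    files.add(path)
                else:
                    files.discard(path)
        return files


log = ToyTransactionLog()
log.commit([("add", "part-0.parquet")], read_version=-1)  # version 0
log.commit([("add", "part-1.parquet")], read_version=0)   # version 1
# Version 2 rewrites part-0 into part-2 in a single commit.
log.commit([("remove", "part-0.parquet"), ("add", "part-2.parquet")], read_version=1)
print(sorted(log.snapshot()))   # latest version
print(sorted(log.snapshot(0)))  # "time travel" back to version 0
```

Because old commits are never modified, reading an earlier version is just a shorter replay; the real system also writes periodic checkpoints so readers do not have to replay the whole log.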

Collaborative notebooks are a core component for multidisciplinary teams. They allow programming in Python, R, SQL, and Scala, and support visualization libraries for exploring data graphically. Real-time collaboration streamlines hypothesis validation, code debugging, and model prototyping, improving productivity in complex projects.

In the Machine Learning domain, Databricks offers native integration with MLflow, enabling experiment tracking, hyperparameter management, and model deployment across various execution environments. This accelerates the transition from lab to production and ensures reproducibility across full lifecycles.

The Apache Spark-based engine enables massively parallel processing for both streaming and batch workloads and, given sufficient compute, scales to petabyte-scale datasets. This makes Databricks a strategic tool for executing ETL, exploratory analysis, or large-scale model training.
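Spark Structured Streaming processes a stream, by default, as a series of small micro-batches while keeping running state for aggregations, which is why the same logic can serve both streaming and batch workloads. The plain-Python sketch below models that idea: counts are updated incrementally per micro-batch, and the final state matches a one-shot batch computation over the same data. The event data and function names are hypothetical, and this is not the Spark API.

```python
from collections import Counter
from itertools import islice


def micro_batches(events, batch_size):
    """Yield consecutive chunks of the event stream (a stand-in for trigger intervals)."""
    it = iter(events)
    while batch := list(islice(it, batch_size)):
        yield batch


def streaming_counts(events, batch_size):
    """Incrementally update per-page counts after each micro-batch; return every snapshot."""
    state = Counter()
    snapshots = []
    for batch in micro_batches(events, batch_size):
        state.update(e["page"] for e in batch)  # only the new batch is processed
        snapshots.append(dict(state))
    return snapshots


def batch_counts(events):
    """Process everything at once, as a batch job over the same data would."""
    return dict(Counter(e["page"] for e in events))


EVENTS = [{"page": p} for p in ["home", "cart", "home", "checkout", "home", "cart"]]
snaps = streaming_counts(EVENTS, batch_size=2)
print(snaps[-1] == batch_counts(EVENTS))  # True
```

The key property is that each micro-batch touches only new events plus the accumulated state, so latency stays low as the stream grows, while the end result agrees with a full batch recomputation.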

Integration with leading cloud providers—AWS, Azure, and Google Cloud—ensures deployment flexibility, access to complementary ecosystems, and elasticity in resource consumption. Dynamic autoscaling adjusts infrastructure on demand, optimizing costs without compromising performance.

Regarding governance and security, Unity Catalog centralizes metadata management and access control and supports compliance with regulations such as GDPR or HIPAA. Encryption in transit and at rest, along with operation traceability, provides confidence for data-sensitive projects.

Strengths and Weaknesses of Databricks

| Strengths | Weaknesses |
|---|---|
| Lakehouse architecture unifying Data Lake and Data Warehouse. | Steep learning curve for new users. |
| Cloud scalability with support for AWS, Azure, and Google Cloud. | High usage costs in large-scale environments. |
| Distributed processing with Apache Spark for large data volumes. | Dependence on a robust cloud infrastructure. |
| Integration with Machine Learning and MLOps libraries and frameworks. | Less focus on traditional BI compared to platforms like Snowflake. |
| Collaborative notebooks for real-time teamwork. | Complex initial setup and advanced configuration. |
| Unity Catalog for data governance, security, and compliance. | Less appealing for small projects with low data volumes. |

Licensing and Installation

Databricks is offered as a SaaS platform under a subscription-based licensing model with consumption-based pricing (pay-as-you-go or committed-use plans). It is designed for businesses of all sizes, from SMBs to large enterprises, and is deployed as a fully managed cloud service on AWS, Azure, and Google Cloud, with multi-cloud capabilities. It has no native on-premises deployment but supports hybrid environments via connectors and virtual private networks.

Frequently Asked Questions about Databricks

What is Databricks? Databricks is a unified Lakehouse platform that combines data lake and data warehouse capabilities with distributed processing based on Apache Spark.

Why choose Databricks for Data Science projects? Because it brings collaborative notebooks into a single workspace, supports multiple languages (Python, SQL, Scala, R), and fosters collaboration among technical teams.

What are Databricks’ main strengths? It unifies Data Lake and Data Warehouse through its Lakehouse architecture, offers elastic scalability on AWS, Azure, and GCP, enables distributed processing via Apache Spark, provides collaborative notebooks, and centralizes governance with Unity Catalog.

What are Databricks’ main weaknesses? It has a steep learning curve, potentially high costs in large deployments, depends on a robust cloud infrastructure, involves complex cluster management, and focuses more on AI/ML than on traditional BI.

How does it ensure scalability and performance? It offers cloud autoscaling and a distributed engine (Apache Spark) that processes big data in both real-time (streaming) and batch modes.

What Machine Learning components does it include? It includes MLflow for experiment management, Feature Store for feature management, and MLOps tools for model deployment and monitoring.

How does it manage data governance and security? Through Unity Catalog, which centralizes metadata and permissions and supports compliance with standards such as GDPR and HIPAA.

Which clouds are compatible with Databricks? It integrates natively with AWS, Azure, and Google Cloud Platform, enabling hybrid and multi-cloud deployments.
